If you want to analyze unstructured or semi-structured data, a traditional data warehouse is a poor fit. More companies are moving to the data lakehouse architecture, which helps to address this limitation. The open data lakehouse allows you to run warehouse workloads on all kinds of data in an open and flexible architecture.

Data warehouse system analysis and data governance design

Business analytical functions change over time, which changes the requirements for the systems that support them. Data warehouse and OLAP systems are therefore dynamic, and the design process is continuous. You can adhere to this principle by following incremental development methodologies when building the warehouse, so that you deliver production functionality as quickly as possible. Following Kimball’s data mart strategy or Linstedt’s Data Vault methodology will help you develop systems that grow incrementally while accommodating change smoothly. Use a semantic layer in your platform, such as an MS SSAS cube or a Business Objects Universe, to provide an easy-to-understand business interface to your data. An SSAS cube also gives users an easy mechanism for querying data from Excel, which is still the most popular data analytics tool.

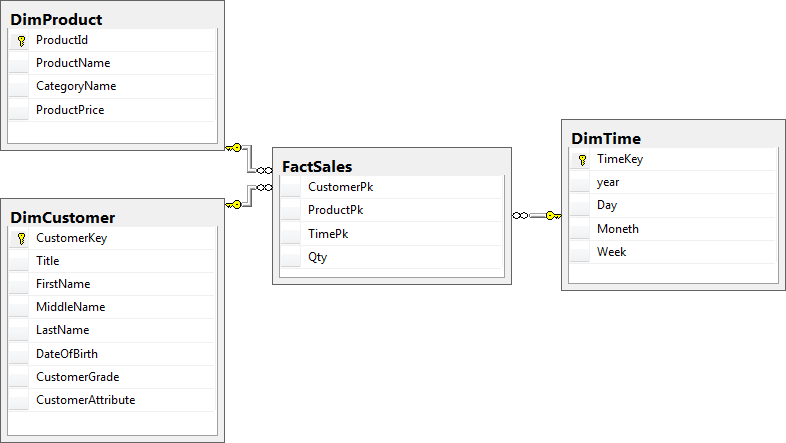

The term “data mart” is one you might hear when working with data warehouses. Within a data mart, a dimension table can store an entity, such as a customer or product, or it can store a concept such as a date. Dimension tables are not normalised, so they contain a lot of redundant data, but this is a deliberate design choice that helps to speed up SELECT queries.
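As a rough illustration of a table that stores a concept such as a date, the sketch below generates one denormalised row per calendar day. The function name `build_date_dimension` and the column names are assumptions made for this example, not part of any particular product. The year, quarter, and month are repeated on every row, which is the kind of deliberate redundancy that keeps SELECT queries simple.

```python
from datetime import date, timedelta


def build_date_dimension(start: date, end: date) -> list[dict]:
    """Return one denormalised row per calendar day from start to end inclusive."""
    rows = []
    current = start
    while current <= end:
        rows.append({
            # Integer key in YYYYMMDD form, e.g. 20240105 (an illustrative convention).
            "date_key": current.year * 10000 + current.month * 100 + current.day,
            "full_date": current.isoformat(),
            # Redundant attributes stored on every row so queries need no date maths.
            "year": current.year,
            "quarter": (current.month - 1) // 3 + 1,
            "month": current.month,
            "day_of_month": current.day,
            "is_weekend": current.weekday() >= 5,  # Monday is 0, so 5 and 6 are the weekend
        })
        current += timedelta(days=1)
    return rows


if __name__ == "__main__":
    for row in build_date_dimension(date(2024, 1, 5), date(2024, 1, 7)):
        print(row)
```

A fact table would then reference `date_key` instead of storing a raw timestamp, so filters such as "weekends in quarter 1" become plain column comparisons.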

A data warehouse system enables an organization to run powerful analytics on large amounts of data (up to petabytes) in ways that a standard database cannot. You design and build your data warehouse based on your reporting requirements. After you have identified the data you need, you design the data flows that bring that information into your data warehouse. Incorporate data lineage and auditing features in your data warehouses to track changes, maintain data quality, and comply with regulations.
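One common way to support lineage and auditing is to stamp every loaded row with metadata about where it came from and when. The sketch below is a minimal illustration under that assumption. The helper `with_audit_columns` and the underscore-prefixed column names are hypothetical, chosen only for this example.

```python
import hashlib
import json
from datetime import datetime, timezone


def with_audit_columns(rows, source_system, batch_id, loaded_at=None):
    """Return copies of rows with lineage and audit metadata attached."""
    loaded_at = loaded_at or datetime.now(timezone.utc)
    stamped = []
    for row in rows:
        # Hash the business columns only, with sorted keys, so the same content
        # always yields the same hash and changed rows can be detected later.
        payload = json.dumps(row, sort_keys=True, default=str).encode("utf-8")
        stamped.append({
            **row,
            "_source_system": source_system,  # where the row came from
            "_batch_id": batch_id,            # which load produced it
            "_loaded_at": loaded_at.isoformat(),
            "_row_hash": hashlib.sha256(payload).hexdigest(),
        })
    return stamped


if __name__ == "__main__":
    customers = [{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}]
    for row in with_audit_columns(customers, "crm", "batch-001"):
        print(row)
```

Comparing `_row_hash` between loads is a cheap way to spot changed records, and `_batch_id` lets you trace or roll back a specific load.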

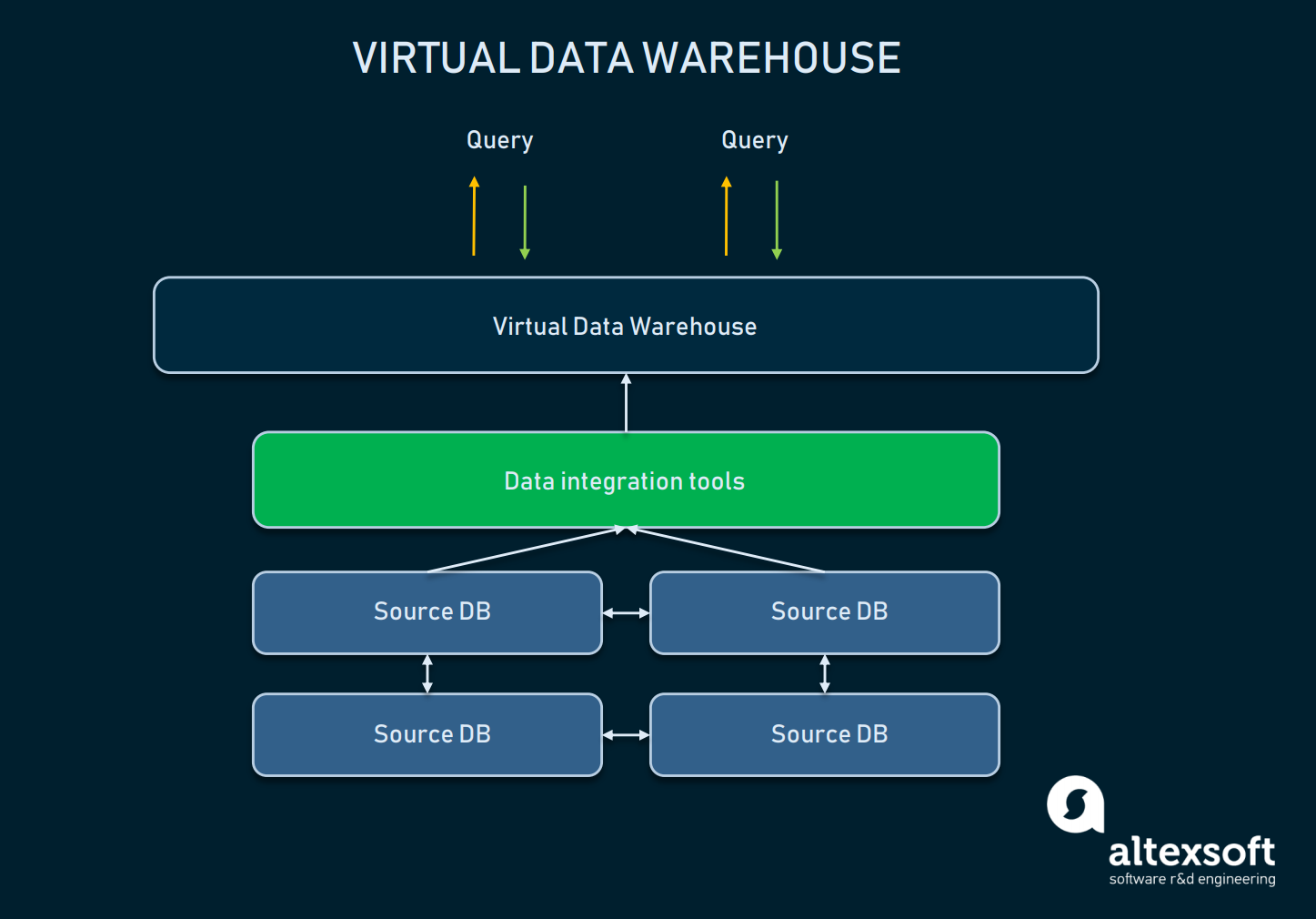

ETL's counterpart, Extract, Load, Transform (ELT), can negatively impact the performance of many custom-built warehouses, since data is loaded directly into the warehouse before data organization and cleansing occur. However, there might be other data integration use cases that suit the ELT process. Integrate.io not only executes ETL but can handle ELT, Reverse ETL, and Change Data Capture (CDC), as well as provide data observability and data warehouse insights. It involves labeling to map the volume of columns to their physical location. The next factor in choosing a data warehouse design is the complexity of the data sources.
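To make the difference in ordering concrete, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in for a warehouse. The table names, the sample rows, and the cleansing rule are all assumptions made for illustration. In the ETL variant the rows are cleaned before they reach the database. In the ELT variant the raw rows are loaded first and cleaned with SQL inside the database.

```python
import sqlite3

# Hypothetical raw extract: every value arrives as text, and some rows are dirty.
RAW_ORDERS = [
    ("1001", " Alice ", "19.5"),
    ("1002", "BOB", "5.0"),
    ("1003", "   ", "12.25"),  # blank customer: rejected by the cleansing rule
]


def clean(row):
    """Cleansing rule: trim and lower-case the customer, cast the numeric fields."""
    order_id, customer, amount = row
    customer = customer.strip().lower()
    if not customer:
        return None
    return int(order_id), customer, float(amount)


def run_etl(conn):
    """ETL: transform in the pipeline, then load only the clean rows."""
    conn.execute("CREATE TABLE orders_etl (order_id INTEGER, customer TEXT, amount REAL)")
    cleaned = [r for r in map(clean, RAW_ORDERS) if r is not None]
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def run_elt(conn):
    """ELT: load the raw rows as-is, then transform with SQL inside the database."""
    conn.execute("CREATE TABLE orders_raw (order_id TEXT, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", RAW_ORDERS)
    conn.execute("""
        CREATE TABLE orders_elt AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               LOWER(TRIM(customer))     AS customer,
               CAST(amount AS REAL)      AS amount
        FROM orders_raw
        WHERE TRIM(customer) <> ''
    """)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(conn)
    run_elt(conn)
    print(conn.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall())
    print(conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall())
```

Both variants end with the same clean table. The difference is that ELT also keeps the uncleaned `orders_raw` table in the database and spends the database's own compute on the transformation.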

Query Optimization Techniques for Complex Analytical Queries

Building the enterprise-wide warehouse first results in a single data warehouse without the smaller data warehouses in between, but it may take longer to see the benefits. The design is made to optimise the performance of SELECT queries across more data. INSERTs and UPDATEs happen rarely (often overnight as one big batch), while SELECT statements happen all the time.

Chamitha is an IT veteran specializing in data warehouse system architecture, data engineering, business analysis, and project management.

Analysis tools can give decision-makers insights into the integrity of their data that manual labour can’t replicate, but the process of quality assurance is costly and labour-intensive.

If a data warehouse is like an ocean of data (vast, diverse, and containing data from all sources), a data mart is like a river: based on a single ecosystem and all flowing in the same direction.

The top-down approach, associated with Inmon, is the most common way for large organisations to build data warehouses. Since it’s a strong, high-integrity model and data marts can be easily created from the warehouse, it’s a natural fit for organisations with large data needs. Importantly, its logical models are normalised (structured according to normal forms to reduce redundancy), and the physical data warehouse is built to reflect that normalised structure. However, the expense of upkeep and of maintaining an increasingly complex system is prohibitive for some organisations.

In contrast to Inmon’s approach, in Kimball’s bottom-up approach the data warehouse consists of a web of data marts. Data is organised into “star schemas” where data items are used consistently across different data marts.

MarkLogic is a fully managed, fully automated cloud service to integrate data from silos.

When you first hear the term "data warehouse," you might think of a few other data terms like "data lake," "database," or "data mart." However, those are different things: a database or a data mart, for example, has a more limited scope.

The data model includes primary and foreign keys, as well as the data types for each column.

Under the Inmon method, requirements are gathered and the data warehouse is developed based on the organisation as a whole, instead of on individual areas as in the Kimball method.

Data Warehouse Design Practices and Methodologies

At the end of this module, you will have a solid background to communicate with and assist business analysts who use a multidimensional representation of a data warehouse. To complete this module, you should proceed to the assignment and quiz involving WebPivotTable.

OLAP (Online Analytical Processing) cubes are commonly used in data warehousing to enable faster, more efficient analysis of large amounts of data. OLAP cubes are based on multidimensional databases that store summarized data and allow users to quickly analyze information along different dimensions. ETL will likely be the go-to for pulling data from source systems into your warehouse.
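The idea of storing summarized data along several dimensions can be sketched in a few lines of plain Python. This is a toy model, not how a real OLAP engine is implemented. The sample `SALES` rows and the function `build_cube` are assumptions made for this example. The function pre-aggregates a measure for every subset of the dimensions, so a later lookup at any level of detail is a dictionary access rather than a scan of the facts.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical fact rows: three dimensions and one measure.
SALES = [
    {"region": "EU", "product": "laptop", "year": 2023, "amount": 1200},
    {"region": "EU", "product": "phone", "year": 2023, "amount": 800},
    {"region": "US", "product": "laptop", "year": 2023, "amount": 1500},
    {"region": "US", "product": "laptop", "year": 2024, "amount": 1700},
]
DIMENSIONS = ("region", "product", "year")


def build_cube(facts, dimensions, measure):
    """Pre-aggregate the measure for every subset of the given dimensions."""
    cube = {}
    for size in range(len(dimensions) + 1):
        for dims in combinations(dimensions, size):
            totals = defaultdict(int)
            for fact in facts:
                key = tuple(fact[d] for d in dims)
                totals[key] += fact[measure]
            cube[dims] = dict(totals)
    return cube


if __name__ == "__main__":
    cube = build_cube(SALES, DIMENSIONS, "amount")
    print(cube[()])                      # grand total
    print(cube[("region",)])             # rolled up to region
    print(cube[("product", "year")])     # sliced by product and year
```

With three dimensions the cube holds eight groupings, from the grand total down to the full region, product, and year breakdown. The number of groupings doubles with each added dimension, which is why real systems are selective about what they precompute.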

Combining on-premises and cloud data warehouses yields a hybrid data warehouse that leverages the strengths of both systems.

Dimensional modeling focuses on denormalizing data to create more efficient and easily accessible data models. A star schema comprises fact tables and dimension tables that are joined in a simple manner.

Defining access controls is important to maintain data integrity, keep the data warehouse system secure, and ensure that only authorized users access the data. Design well-structured and well-documented data models, and you are halfway to having efficient new data warehouses. ScienceSoft’s data warehouse team is ready to design a cost-effective and high-performing data warehouse solution within the set time and budget frames, applying data warehouse design best practices.
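A fact table joined to its dimension tables can be sketched with Python's built-in `sqlite3` module. The table names (`fact_sales`, `dim_product`, `dim_date`), the columns, and the sample rows are assumptions made for illustration only. The query shows the typical shape of an analytical query against such a model: one join per dimension, then a GROUP BY on dimension attributes.

```python
import sqlite3


def build_star_schema(conn):
    """Create one fact table and two dimension tables with a few sample rows."""
    conn.executescript("""
        CREATE TABLE dim_product (
            product_key INTEGER PRIMARY KEY,
            name        TEXT,
            category    TEXT
        );
        CREATE TABLE dim_date (
            date_key INTEGER PRIMARY KEY,
            year     INTEGER,
            month    INTEGER
        );
        CREATE TABLE fact_sales (
            product_key INTEGER REFERENCES dim_product(product_key),
            date_key    INTEGER REFERENCES dim_date(date_key),
            quantity    INTEGER,
            revenue     REAL
        );
        INSERT INTO dim_product VALUES
            (1, 'Laptop', 'Hardware'),
            (2, 'Mouse', 'Hardware'),
            (3, 'Antivirus', 'Software');
        INSERT INTO dim_date VALUES
            (20240115, 2024, 1),
            (20240210, 2024, 2);
        INSERT INTO fact_sales VALUES
            (1, 20240115, 2, 2000.0),
            (2, 20240115, 5, 100.0),
            (3, 20240210, 10, 300.0),
            (1, 20240210, 1, 1000.0);
    """)


def revenue_by_category_and_month(conn):
    """Join the fact table to each dimension once, then aggregate."""
    return conn.execute("""
        SELECT p.category, d.year, d.month, SUM(f.revenue) AS revenue
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_key = f.product_key
        JOIN dim_date    AS d ON d.date_key    = f.date_key
        GROUP BY p.category, d.year, d.month
        ORDER BY p.category, d.year, d.month
    """).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build_star_schema(conn)
    for row in revenue_by_category_and_month(conn):
        print(row)
```

Because every dimension sits exactly one join away from the fact table, adding another breakdown (by customer, by store) means adding one more dimension table and one more join, without restructuring the existing query.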

For example, if a department accesses one type of data from a data silo and another type from a data warehouse, it will lead to confusion, redundancy, and inefficiency. Each data mart should be implemented in its entirety as data is gradually migrated. The key functions of data warehouse software include processing and managing data so that meaningful insights can be drawn from the raw information.

A data warehouse allows you to do the kind of advanced data mining and analytics that would be impossible to do manually. Finally, for your data warehouse to be implemented effectively, it’s important to train users on how to navigate and use the new system. Training large groups of users is difficult in any industry, but once they see what the data can do, most users will be as excited about the change as you are. Then, it’s time to set up automation for any repetitive, click-intensive tasks.

Once the data warehouse is deployed, it is essential to establish a maintenance plan to ensure it continues functioning smoothly and providing value to the organization. Maintenance tasks may include monitoring performance, updating software and hardware, query optimization, and ensuring data quality. It's important to regularly examine and revise the data warehouse design to ensure it meets the organization's changing needs.

Carefully selecting and defining dimensions and hierarchies based on the business context and user requirements will enhance the usability and effectiveness of the dimensional model. With regard to the reporting layer, some visualization tools offer functionality that isn’t readily available in others: for example, Power BI supports custom MDX queries, but Tableau doesn’t. My point isn’t to advocate the desertion of stored procedures or the avoidance of SSAS cubes or Tableau in your systems. My intention is merely to promote the importance of being mindful in justifying any decision to tightly couple your platform to its tools. Data warehousing systems have been a part of business intelligence (BI) solutions for over three decades, but they have evolved recently with the emergence of new data types and data hosting methods.

ETL tools, such as Informatica PowerCenter, Microsoft SQL Server Integration Services (SSIS), and Talend, provide graphical interfaces and pre-built components for designing and executing ETL workflows. These tools automate many of the manual tasks involved in data extraction, transformation, and loading. Additionally, data integration platforms, such as Apache Kafka and Apache NiFi, offer real-time streaming capabilities that enable near-instantaneous data updates and integration. Choosing the right tools and techniques depends on the specific requirements and resources of your organization. The primary goal of data warehousing is to provide decision-makers with timely and accurate information to support data-driven decision-making.
